“Weather Forecasting”

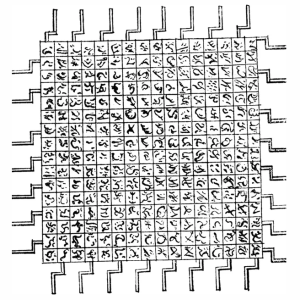

Photo by NOAA, showing weather radar from July 13, 2025

By Scott Hamilton

In light of the recent weather events that have swept across our great nation in the last few months, I felt it might be a good time to talk about the science behind weather forecast models and give some insight into the challenges behind the technology. It amazes me that in the midst of disaster, people in general are always looking for someone to blame. In the case of the most recent deadly flooding across Texas, fingers are being pointed at budget cuts to the National Weather Service. The most interesting part of this blame game is that the budget cuts to the program were minimal, and those cuts do not take effect until the end of July 2025. The real problem runs much deeper, and is much older, than the recent budget cuts. It all links back to the various weather forecast models, how they differ and how we pick which one to follow for a given weather event.

Forecast models fall into three major categories: global models that cover the entire globe, mesoscale models that focus on specific regions and microscale models that focus on specific cities, or even neighborhoods. Each of these models varies in accuracy based on the size of the model, the size of the region and the amount of data being collected and fed into the model. For example, if you want to forecast the weather in Licking, Mo., how far around Licking do you gather your weather data? Should you look only at historical records for Texas County, or add in the surrounding counties? Do you focus on counties to the west, or should you also include counties to the north, south and east? What data do you collect: temperature, humidity, wind speed, wind direction? How far back in time do you look: 1 year, 10 years, 50 years, 100 years, or as far back as you have accurate records? Do you look at every weather sensor station in the region, or just a select few?
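One simple way to frame the question of how far around Licking to look is as a search radius. Here is a minimal Python sketch of that step, assuming each station is described by a latitude and longitude and using the standard haversine great-circle formula. The station names and coordinates are hypothetical, and the coordinates for Licking are approximate, used for illustration only.

```python
import math
from typing import List, NamedTuple

EARTH_RADIUS_MI = 3958.8  # mean Earth radius in miles


class Station(NamedTuple):
    name: str
    lat: float  # degrees north
    lon: float  # degrees east (negative values are west)


def haversine_miles(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two lat/lon points, in miles."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))


def stations_within(lat: float, lon: float, stations: List[Station], radius_mi: float) -> List[Station]:
    """Keep only the stations whose distance from (lat, lon) is at most radius_mi."""
    return [s for s in stations if haversine_miles(lat, lon, s.lat, s.lon) <= radius_mi]


# Approximate location of Licking, Mo. (assumed for illustration).
LICKING = (37.50, -91.86)

# Hypothetical stations: one in town, one about 35 miles north, one about 69 miles north.
STATIONS = [
    Station("HYPOTHETICAL-TOWN", 37.50, -91.86),
    Station("HYPOTHETICAL-NORTH-35", 38.00, -91.86),
    Station("HYPOTHETICAL-NORTH-69", 38.50, -91.86),
]

for radius in (10, 50, 100):
    names = [s.name for s in stations_within(*LICKING, STATIONS, radius)]
    print(radius, names)
```

Widening the radius from 10 to 100 miles pulls in all three stations: more data, but from places whose weather may differ from Licking’s, which is exactly the trade-off the questions in this paragraph raise.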

As you can see from the questions above, weather forecasting is not a simple problem to solve. Our history is very limited: accurate data goes back only a few hundred years at best, and the accuracy of that data has changed over time. The two most widely recognized weather models are those of the European Centre for Medium-Range Weather Forecasts (ECMWF) and the United States’ Global Forecast System (GFS). Both are built on long-established mathematical models that have been trusted for generations. Then there is Climavision’s numerical weather prediction (NWP) model, which uses cutting-edge AI technology to deliver precision forecasts. These are just a few of the hundreds of forecast models out there, and the National Weather Service looks at the data coming from several models before making a forecast.

In the recent flooding event in Texas, a majority of the weather models got the forecast completely wrong. Most predicted much less rainfall, with the storms landing farther north and having minimal impact on the flood plain. Only two models predicted the location correctly, and only one predicted the potential for disastrous flooding. The Canadian GEM Nomogram Forecast model predicted the exact location of the storm but overshot the rainfall amounts, which made its forecast seem unreasonable compared with the other models: not only did it show a much more southerly track for the storm, but it also predicted an unbelievably high rainfall total. In hindsight, we can say the forecasters at the National Weather Service should have followed that one outlier model over the fifteen models that predicted otherwise.

So why do all these models produce different forecasts and apparently conflicting information? It comes down to three main factors. The first is the amount of data used to create the model, which relates to the resolution of the weather data. Think of it like this: it is impossible for the models to know the exact temperature and humidity at every point in the region. That would be like expecting you to read an entire set of encyclopedias or, for those who never owned a set, the entire contents of Wikipedia. None of us have done that, and likely never will, but we have each read a sampling of the information. Weather forecast models do the same thing; they can’t look at all the information, so they select certain parts of it to focus on, much as you might read only the headlines in a newspaper rather than the whole thing. The U.S. model gathers satellite-based weather data at a resolution of 100 square miles over a period of 100 years. Other forecast models choose higher-resolution data over a shorter time period; GEM uses data at a 10-square-mile resolution but looks back only 25 years.
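To put rough numbers on that trade-off, here is a minimal Python sketch. The 100-square-mile/100-year and 10-square-mile/25-year figures come from the paragraph above; the 1,000-square-mile region and the rule of counting one record per grid cell per year of history are illustrative assumptions, not a description of how either model actually stores its data.

```python
import math


def grid_cells(region_sq_mi: float, cell_sq_mi: float) -> int:
    """Cells needed to tile a region with square cells of the given area (rounded up)."""
    return math.ceil(region_sq_mi / cell_sq_mi)


def cell_years(region_sq_mi: float, cell_sq_mi: float, years: int) -> int:
    """Rough data volume, assuming one record per grid cell per year of history."""
    return grid_cells(region_sq_mi, cell_sq_mi) * years


REGION_SQ_MI = 1_000  # hypothetical region, roughly the size of a large rural county

coarse = cell_years(REGION_SQ_MI, cell_sq_mi=100, years=100)  # coarse grid, long history
fine = cell_years(REGION_SQ_MI, cell_sq_mi=10, years=25)      # fine grid, short history

print(grid_cells(REGION_SQ_MI, 100), coarse)  # 10 1000
print(grid_cells(REGION_SQ_MI, 10), fine)     # 100 2500
```

Under these assumptions the finer grid sees ten times as many cells in the same region and, even with a quarter of the history, ends up with 2.5 times as many cell-years of data; the coarser grid trades that local detail for a longer view of the past.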

A second contributing factor is the quality of the data used in the models. Historically, national weather forecast models have trusted only data from government-owned and -operated weather stations. In my opinion, one of the most accurate forecast models was developed by engineers at Weather Underground before the company sold out to The Weather Channel; it trusted weather data from personal weather stations run by individuals all over the country. The National Weather Service, by contrast, relied on data from airports and less accurate satellite measurements rather than trusting the general public to gather the data. Unfortunately, Weather Underground’s models no longer accept personal weather station data and are now no different from the nationally operated models.

That brings me to the third factor. Several years ago I experimented with my own weather forecast models and concluded that most models are missing one major ingredient: they all trust the computer’s math, and as computers have advanced, their rounding errors have gotten progressively worse. It seems modelers have forgotten the basic principles of significant digits and correct rounding in weather forecast calculations. I suggest you look it up and see the impact for yourself; next week I’ll share a short lesson with some examples of how significant digits can have a major impact on the final result of even a simple calculation.
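As a small preview of the rounding problem, here is a minimal Python sketch. It shows two things: binary floating point cannot store 0.1 exactly, so adding it ten times does not give exactly 1.0; and rounding every intermediate result to three decimal places can push an iterated calculation onto a completely different path. The logistic map used here is a textbook stand-in for a sensitive calculation, not a weather model, and the parameter r = 3.9, the starting value 0.5 and the choice of three decimal places are arbitrary assumptions.

```python
import math
from typing import List, Optional


def repeated_add(value: float, times: int) -> float:
    """Add `value` to a running total `times` times, one float operation at a time."""
    total = 0.0
    for _ in range(times):
        total += value  # each addition is rounded to the nearest binary double
    return total


def logistic_trajectory(x0: float, r: float, steps: int, digits: Optional[int] = None) -> List[float]:
    """Iterate x -> r * x * (1 - x); if `digits` is given, round after every step."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
        if digits is not None:
            x = round(x, digits)  # simulate keeping only a few significant decimals
        xs.append(x)
    return xs


if __name__ == "__main__":
    # 1) 0.1 has no exact binary representation, so small errors creep in.
    print(repeated_add(0.1, 10))   # 0.9999999999999999
    print(math.fsum([0.1] * 10))   # 1.0 (error-compensated summation)

    # 2) A tiny per-step rounding difference can grow in a sensitive calculation.
    full = logistic_trajectory(0.5, 3.9, 60)
    rounded = logistic_trajectory(0.5, 3.9, 60, digits=3)
    for step in (1, 10, 20, 40, 60):
        print(step, full[step], rounded[step], abs(full[step] - rounded[step]))
```

Both runs start from the same value, yet within a few dozen steps the rounded run no longer resembles the full-precision one, which illustrates why how and when a calculation rounds its numbers can matter as much as the equations themselves.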

Until next week, stay safe and learn something new.

Scott Hamilton is an Expert in Emerging Technologies at ATOS and can be reached with questions and comments via email to shamilton@techshepherd.org or through his website at https://www.techshepherd.org.