“Speeding Up Matrix Multiplication”

Aay1373, CC BY-SA 4.0 <https://creativecommons.org/licenses/by-sa/4.0>, via Wikimedia Commons

By Scott Hamilton

Earlier this year a group of mathematicians at Johannes Kepler University Linz (JKU) made an interesting discovery improving the speed of matrix multiplication algorithms. Manuel Kauers and Isaac Wood submitted their paper titled “Consequences of the Moosbauer-Poole Algorithms,” building on the prior work of Moosbauer and Poole. The first thing I must do for you to understand the significance of this discovery is to explain a little about matrix multiplication and why it is important.

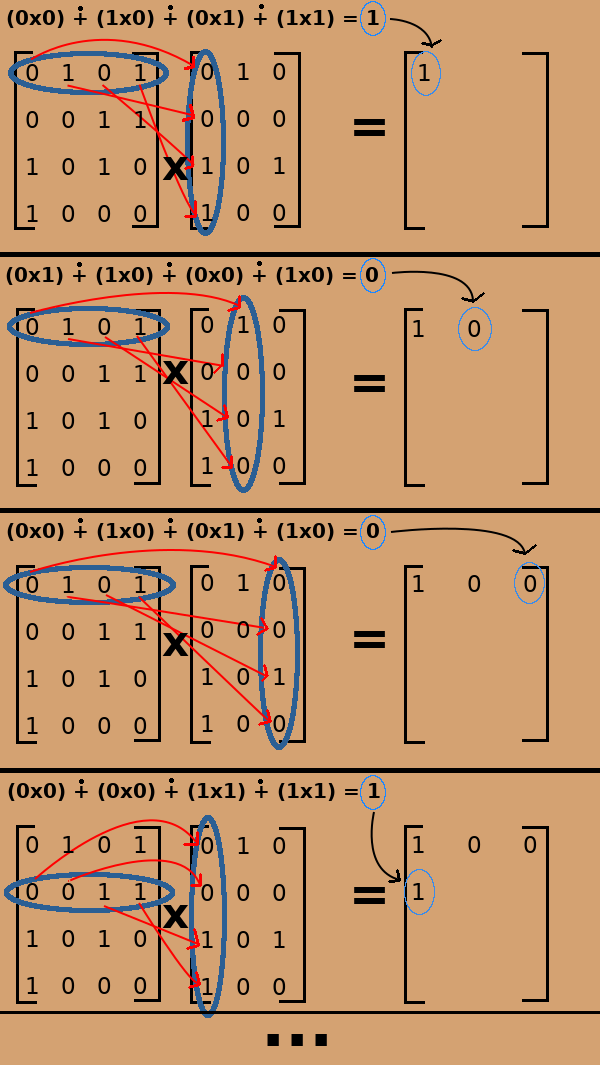

For those of you who do not know, a matrix is a special group of numbers arranged in rows and columns. You can think of it like a calendar, except the numbers in the squares are random instead of in number order. A matrix can be any rectangular size. When you multiply two matrices together, there are a couple of rules that must be followed. The first matrix has to have the same number of columns as there are rows in the second matrix. The resulting matrix has the same number of rows as the first matrix and the number of columns of the second matrix.

The process of multiplying matrices is used in linear algebra as one of the steps in solving large systems of equations. This is done for making weather forecasts, designing water and sewage systems, computing airflow around airplane wings and race car spoilers, among many other design aspects of mechanical and electrical systems. The fact that Kauers and Wood found a way to improve this computation by 15 percent is a fairly significant discovery that should have a major impact on science. However in the first month following the release of the paper and making their algorithm publicly available, they still had zero viewers of the algorithm.

I find it quite exciting, myself, that there are still new discoveries being made in the field of mathematics, as I always thought of it as a very limited field. Of course the more I learn about the field the more I realize that it is a very wide-spread field and worthy of more research. It is interesting that it was decades ago when Moosbauer and Poole discovered a formula for figuring out how many multiplications were necessary to multiply two matrices together. Surprisingly they were only able to predict it for perfect square matrices; no one has figured it out for rectangular matrices, at least not until the recent research by Kaures and Wood.

Moosbauer and Poole showed that multiplication of two 5×5 matrices required no more than 93 multiplications and a 6×6 matrix required no more than 153 multiplications. Taking these schemes as the starting point, Kauers and Wood found improved matrix multiplication schemes for various rectangular matrices using what they call a flip graph search. It has been more than half a century since the discovery of Strassen’s algorithm, which up until a few months ago was the fastest known method of multiplying two matrices, but we still do not know how many multiplications are necessary in the ground wiring for multiplying two rectangular matrices.

To try and simplify this new discovery as much as possible, they basically discovered that you could treat a matrix multiplication of a 3×3 matrix by a 3×4 matrix as two multiplications, one of a 3×3 matrix by a 3×3 matrix, and the multiplication of the same 3×3 matrix by a 3×1 matrix, to arrive at the same result. By using these block-wise methods they were able to discover short-cuts for multiplying certain rectangular matrices. This vastly reduces the number of necessary calculations and results in roughly 15 percent improvement in performance.

This research could lead to massive improvement in computation and could even result in a redesign of graphic processing units, which use these functions to compute the images displayed on your computer screen. Not only would this improve graphics capabilities of computers, but also speed up many of the artificial intelligence algorithms that depend heavily on this technology. We are living in exciting times where we have computers powerful enough to assist mathematicians in their research. We are making new discoveries in mathematics at a faster rate now than ever before. As computers become more complex, they help us to grasp a better understanding of mathematics and this results in new discoveries. If you have an interest in mathematics research, I highly recommend downloading a copy of SageMath and playing around with some of the more complex features.

Until next week, stay safe and learn something new.

Scott Hamilton is an Expert in Emerging Technologies at ATOS and can be reached with questions and comments via email to shamilton@techshepherd.org or through his website at https://www.techshepherd.org.